🗸 Gitea Actions Part2 - Jekyll-Dockerimage

What happened so far

I wasn’t able to set up the CI/CD pipeline in Part 1 of this series. There, I tried a slightly modified copy of the GitHub Action that builds Jekyll for GitHub Pages. This time I wanted to run Jekyll as a Docker image right from the start. There wasn’t much choice on Docker Hub, but I found jvconseil’s jekyll-docker, which seems well documented and was actively maintained as of late 2023.

“Set up job”

So I add the Jekyll Docker image to my workflow:

# workflows/jekyll-build-action.yml
jobs:
  # Build job
  build:
    runs-on: ubuntu-latest # this is the "label" the runner will use and map to docker target OS
    container: jvconseil/jekyll-docker
    # [...]

Here I’m telling Gitea’s act_runner to run the job under the ubuntu-latest label, which in my case points to a minimalistic node:16-bullseye image (Debian 11). I’m then telling Docker to load the jekyll-docker image, where the dependencies I need for my build are already installed.
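For reference, the mapping from label to image lives in the runner's configuration. The fragment below is only a sketch: it assumes the default mapping that act_runner shipped with around that time, and the image tag may well differ in your setup.

```yaml
# runner/config.yml (sketch, values are assumptions)
runner:
  labels:
    # Format: <label>:docker://<image>
    # Jobs with "runs-on: ubuntu-latest" start in this image
    # unless the job sets its own "container:".
    - "ubuntu-latest:docker://node:16-bullseye"
```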

bundle install

Well, the initial build fails because the Ruby dependencies specified in my project’s Gemfile are not installed yet. The error message looks like this:

bundler: failed to load command: jekyll (/usr/gem/bin/jekyll)

/usr/local/lib/ruby/gems/3.2.0/gems/bundler-2.4.22/lib/bundler/resolver.rb:332:in `raise_not_found!': Could not find gem 'github-pages' in locally installed gems. (Bundler::GemNotFound)

from /usr/local/lib/ruby/gems/3.2.0/gems/bundler-2.4.22/lib/bundler/resolver.rb:392:in `block in prepare_dependencies'

Bundler is Ruby’s package manager. Similar to npm for JavaScript or pip for Python, Bundler loads and pins the dependencies and libraries of applications written in Ruby. The Gemfile specifies the packages for Bundler to install.

So let’s add bundle install to the script like so:

# workflows/jekyll-build-action.yml

# [...]

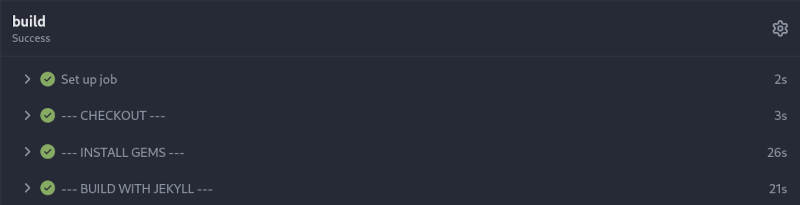

steps:
  - name: --- CHECKOUT ---
    uses: actions/checkout@v3
  - name: --- INSTALL GEMS ---
    run: bundle install # installs the required dependencies, but then fails because it has no permission to write Gemfile.lock
  - name: --- BUILD WITH JEKYLL ---
    # Outputs to the './_site' directory by default
    run: bundle exec jekyll build --destination /opt/blog_staging
    env:
      JEKYLL_ENV: production

act_runner: no write permissions to Gemfile.lock

This fails again. act_runner reports:

gitea-runner-1 | [Deploy Jekyll site/build] | `/workspace/schallbert/blog/Gemfile.lock`. It is likely that you need to grant

gitea-runner-1 | [Deploy Jekyll site/build] | write permissions for that path.

gitea-runner-1 | [Deploy Jekyll site/build] ❌ Failure - Main ---INSTALL GEMS ---

gitea-runner-1 | [Deploy Jekyll site/build] exitcode '23': failure

After a lengthy search I saw that Gemfile.lock had never been added to version control on my local machine because it is listed in .gitignore. So Bundler in act_runner tries to create it from the given Gemfile, without success, as it only has read access to the checked-out files.

To solve this, I add Gemfile.lock to version control and remove it from .gitignore. As an additional benefit, I make sure that my local and remote build environments are identical.

If you don’t want this, you can also create Gemfile.lock during the action run and hand it over to the Bundler user (chown Bundler Gemfile.lock). Refer to the Bundler website for more information.

Dependency: sass-embedded

Less than 20 seconds into the build I get the following error:

Resolving dependencies...

Could not find gem 'sass-embedded (= 1.69.5)' with platform 'x86_64-linux' in

rubygems repository https://rubygems.org/ or installed locally.

After some more searching, I found a resolution: specify a certain version of jekyll-sass-converter in the action or, alternatively, add the gem “github-pages” to the Gemfile. The latter pulls in a working version of Sass, the preprocessor for Cascading Style Sheets (CSS).

Finally, bundle install finishes successfully:

✅ Bundle complete! 5 Gemfile dependencies, 43 gems now installed.

jekyll build

As I want act_runner to save the build output on my host machine, I add a Volume to Gitea’s docker-compose.yml:

# gitea/docker-compose.yml
# [...]
volumes:
  - ./runner/blog_staging:/opt/blog_staging
  - ./runner/data:/data
  - /var/run/docker.sock:/var/run/docker.sock

Let’s retry the build:

Destination: /opt/blog_staging

Generating...

Jekyll Feed: Generating feed for posts

jekyll 3.9.3 | Error: Permission denied @ dir_s_mkdir - /opt/blog_staging

/usr/local/lib/ruby/3.2.0/fileutils.rb:406:in `mkdir': Permission denied @ dir_s_mkdir - /opt/blog_staging (Errno::EACCES)

from /usr/local/lib/ruby/3.2.0/fileutils.rb:406:in `fu_mkdir'

This problem is easy to solve as well: either build to /tmp, where the jekyll user has write access, or hand over the output directory like so: chown -R jekyll /your/build/output.
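The first option could look like the fragment below. It is a sketch only: the target path /tmp/blog_staging is an assumption, chosen because it is a directory the jekyll user can create by itself.

```yaml
# workflows/jekyll-build-action.yml (sketch)
# [...]
steps:
  - name: --- BUILD WITH JEKYLL ---
    # /tmp is writable for the jekyll user, so no chown is needed here
    run: bundle exec jekyll build --destination /tmp/blog_staging
    env:
      JEKYLL_ENV: production
```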

With this change, I get successful action runs.

Transfer artifacts

Where do I find the build artifacts now? I don’t see any _site folder, neither on the host machine’s volume nor in the Gitea or runner containers.

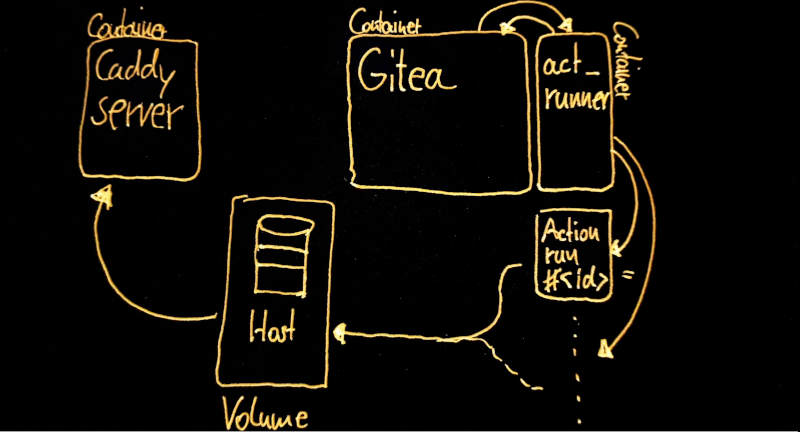

Docker volumes for act_runner cannot share build artifact

This seems logical once I learn the mechanics of act_runner: it spawns a separate Docker container for each action run, with its own volumes that are named after the task ID.

DRIVER VOLUME NAME

--> local GITEA-ACTIONS-TASK-84_WORKFLOW-Deploy-Jekyll-site_JOB-build <--

--> local GITEA-ACTIONS-TASK-84_WORKFLOW-Deploy-Jekyll-site_JOB-build-env <--

local act-toolcache

local blog_staging

If I do not extract the build artifacts during the action run, they are thrown away together with the container once the run has completed. The volume I set up for the runner above doesn’t reach the action, because the action again executes in its own isolated container.

Really understanding this cost me a lot of time and many failing action runs. Don’t repeat my mistakes and read the corresponding section in Gitea’s documentation thoroughly.

Well, this turns out to be more complicated than I thought. For the single maintainer of a website, this is like taking a sledgehammer to crack a nut. Anyway, I’ll follow through.

But I can use upload-artifact, right?

Right? Well, at least I thought so. I modified my Action script accordingly and used the upload function from the Github Actions marketplace:

# workflows/jekyll-build-action.yml
# [...]
# Automatically upload the build folder to Gitea's blog repo folder
- name: --- UPLOAD ARTIFACT ---
  uses: actions/upload-artifact@v3
  with:
    path: /workspace/schallbert/blog/
    name: Blog_Staging
    retention-days: 2

Great, now I have a downloadable Zip file in Gitea’s web interface.

But how do I get it onto the server’s disk? The action is fully encapsulated and cannot even access its underlying Docker daemon (for good reasons, I guess).

So I search for the assets in the Gitea container, which persists the artifacts at /data/gitea/actions_artifacts/BUILD_ID. Unfortunately, they are a heap (hundreds) of .chunk.gz files with cryptic numbers as names, and I don’t know how to merge them into one single archive.

And there’s another thing I don’t like about upload-artifact: my website keeps growing because I use more and more media. As a result, the uploader already needs nearly two minutes for compressing and packing, and that time will grow roughly linearly with the size of the site.

Dead end.

docker cp won’t work here either

Then I try using Docker’s copy operation to get the artifacts to my host machine:

# workflows/jekyll-build-action.yml
# [...]
- name: --- COPY ARTIFACT ---
  run: docker cp gitea-runner-1:/workspace/schallbert/blog /tmp/blog_staging

This fails like so:

gitea-runner-1 | docker cp gitea-runner-1:/workspace/schallbert/blog /tmp/blog_staging

[...]

gitea-runner-1 | [Deploy Jekyll site/build] | /var/run/act/workflow/3.sh: line 2: docker: not found

gitea-runner-1 | [Deploy Jekyll site/build] ❌ Failure - Main --- COPY ARTIFACT ---

This was expected, as the action container has no Docker installed. I cannot move files from “inside” the container to another one.

Or can I? By forwarding the host machine’s daemon socket docker.sock, as described in the Gitea documentation, I might be tempted… No, I will not continue here. See above: I’d break encapsulation if I did.

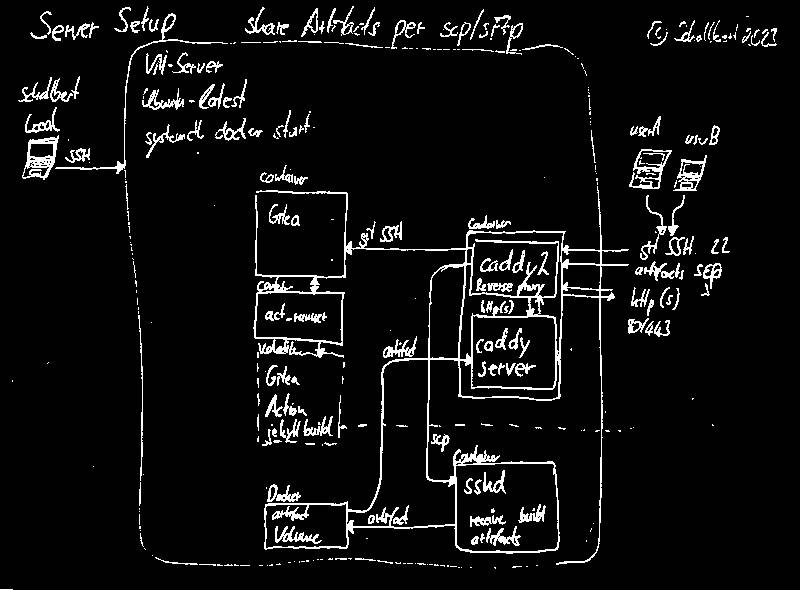

Transfer artifacts via SFTP

I’m running low on options. I think I could copy the artifacts via SFTP from within the act_runner’s action container into the web server container [1].

Luckily, there already is an action for this: scp-files. Now I would have to modify the web server’s container so that it is accessible via SCP, accepts an SSH key, and saves incoming artifacts.

On the other hand, people in multiple forums say that this is not a clean solution: “A container should only accommodate one application.” But there is a way around that, too: a Docker container with only the SSH daemon installed. If I share a volume between that one and my web server, I might be successful.

Looks like more work still. Another container, piping files out and back into my system - that just doesn’t seem right. So I continue my research.

Hand over a volume to the action container

All these failures and dead ends have been with me for over a month now. I still really want to be able to build, test and publish automatically. I’ve learned a lot along the way and now I’m hoping that this approach will finally get me to the solution.

During my search I came across the option to change the configuration of the runner. That way, I might end up including a volume that I can share between the action and my server via the daemon on the host.

If that works, I would have neither an additional security risk like a publicly available file transfer container nor the long waiting times caused by upload-artifact. So let’s get to work! There are other reasons why volumes can make sense in an action, so there must already be people out there who have managed this.

So I add a volume to the Action script:

# workflows/jekyll-build-action.yml
# [...]
container:
  image: jvconseil/jekyll-docker:latest
  volumes:
    - /tmp/blog_staging:/blog_staging
    - /opt/cache:/opt/hostedtoolcache

But the artifacts still don’t show up on my host’s filesystem. I strip down the action as much as I can to just check if the volume creates a folder in the action container:

# workflows/jekyll-build-action.yml
# [...]
steps:
  - name: --- CHECK_VOLUME ---
    run: |
      ls -al /blog_staging

But still:

gitea-runner-1 | [Deploy Jekyll site/build] [DEBUG] Working directory '/workspace/schallbert/blog'

gitea-runner-1 | [Deploy Jekyll site/build] | ls: /blog_staging: No such file or directory

gitea-runner-1 | [Deploy Jekyll site/build] ❌ Failure - Main --- CHECK_VOLUME ---

After some more fruitless attempts (had I misspelled something?) and extensive research in multiple forums, I found out that there is a valid_volumes attribute in act. If a volume is not listed there, it won’t be bound to the container.

OK, let’s add it to the action script:

# jekyll-build-action.yml
# [...]
container:
  image: jvconseil/jekyll-docker:latest
  valid_volumes:
    - '**' # This does not work. Listing the volumes explicitly here does not work either
  volumes:
    - /tmp/blog_staging:/blog_staging
    - /opt/cache:/opt/hostedtoolcache

Still nothing. At least, now, I get a warning in the logs:

gitea-runner-1 | [Deploy Jekyll site/build] [/tmp/blog_staging] is not a valid volume, will be ignored

gitea-runner-1 | [Deploy Jekyll site/build] [/opt/cache] is not a valid volume, will be ignored

Apparently the option is not picked up when it is set this way. There are reports in which registering the volumes does work, though. So I follow those instructions and create a configuration file with the following content:

# runner/config.yml
# Volumes (including bind mounts) can be mounted to containers. Glob syntax is supported, see https://github.com/gobwas/glob
# You can specify multiple volumes. If the sequence is empty, no volumes can be mounted.
# For example, if you only allow containers to mount the `data` volume and all the json files in `/src`, you should change the config to:
# valid_volumes:
#   - data
#   - /src/*.json
# If you want to allow any volume, please use the following configuration:
# valid_volumes:
#   - '**'
valid_volumes: ["/tmp/blog_staging", "/opt/hostedtoolcache"]

Important: each entry must be a string, multiple entries are comma-separated, and every entry has to name the source of the volume. In other words, the part that comes before the colon (:) in the volume definition.
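To make the source/target distinction concrete, here is a sketch that puts the two files side by side. It assumes the layout of act_runner's example configuration, where valid_volumes is nested under a container key; check this against the example config of your runner version.

```yaml
# workflows/jekyll-build-action.yml (excerpt)
#   volumes:
#     - /tmp/blog_staging:/blog_staging
#       ^ source (host)   ^ target (inside the action container)

# runner/config.yml (sketch, nesting is an assumption)
container:
  valid_volumes:
    # Only the source part is listed here, never "source:target"
    - /tmp/blog_staging
```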

However, the error message “not a valid volume” still appears when building.

But then I realize that the config must be made available to the act_runner itself as a volume - otherwise the runner running in the container cannot access it at all!

So my docker-compose.yml for Gitea, section “runner” now looks like this:

# gitea/docker-compose.yml
# [...]
runner:
  image: gitea/act_runner:latest
  environment:
    - CONFIG_FILE=/config.yml
    - GITEA_INSTANCE_URL=https://git.schallbert.de
    - GITEA_RUNNER_NAME=ichlaufe
    - GITEA_RUNNER_REGISTRATION_TOKEN= <redacted>
  volumes:
    - ./runner/config.yml:/config.yml
    - ./runner/data:/data
    - /opt/hostedtoolcache:/opt/hostedtoolcache
    - /var/run/docker.sock:/var/run/docker.sock

And, finally:

gitea-runner-1 | ls -al /blog_staging

gitea-runner-1 | [Deploy Jekyll site/build] | total 8

gitea-runner-1 | [Deploy Jekyll site/build] | drwxr-xr-x 2 root root 4096 Dec 27 07:32 .

gitea-runner-1 | [Deploy Jekyll site/build] | drwxr-xr-x 1 root root 4096 Dec 27 07:46 ..

gitea-runner-1 | [Deploy Jekyll site/build] ✅ Success - Main --- CHECK_VOLUME ---

What an act. I’m so glad that everything is running smoothly now and that I can actually find the build files on my local host system! 🥳
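Put together, the pieces from this post might combine into a workflow like the following. This is a sketch, not my exact file: the trigger and the build destination /blog_staging are assumptions, and because the mounted folder shows up as root-owned in the log above, you may additionally need the chown from the jekyll build section before the build step.

```yaml
# workflows/jekyll-build-action.yml (sketch)
name: Deploy Jekyll site
on: [push] # assumption: the trigger is not shown in this post

jobs:
  build:
    runs-on: ubuntu-latest
    container:
      image: jvconseil/jekyll-docker:latest
      volumes:
        # The source path must be allowed by valid_volumes in runner/config.yml
        - /tmp/blog_staging:/blog_staging
    steps:
      - name: --- CHECKOUT ---
        uses: actions/checkout@v3
      - name: --- INSTALL GEMS ---
        run: bundle install
      - name: --- BUILD WITH JEKYLL ---
        # Assumption: build straight into the shared volume
        run: bundle exec jekyll build --destination /blog_staging
        env:
          JEKYLL_ENV: production
```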

One last tip

If you work with a reverse proxy like I do and get strange connection refused error messages when starting up the runner like:

docker compose up

[+] Running 2/0

✔ Container gitea Created 0.0s

✔ Container gitea-runner-1 Created 0.0s

Attaching to gitea, gitea-runner-1

gitea-runner-1 | level=info msg="Starting runner daemon"

gitea-runner-1 | level=error msg="fail to invoke Declare" error="unavailable: dial tcp <address>: connect: connection refused"

gitea-runner-1 | Error: unavailable: dial tcp <address> connect: connection refused

gitea | Server listening on :: port 22.

gitea-runner-1 exited with code 1

then your reverse proxy is either not configured correctly or, as in my case, shut down.

[1] Using SFTP (SSH File Transfer Protocol), which transfers files over SSH (Secure Shell), would slightly increase the attack surface of my system, because the SSH server would be reachable by anyone and not just by my action.